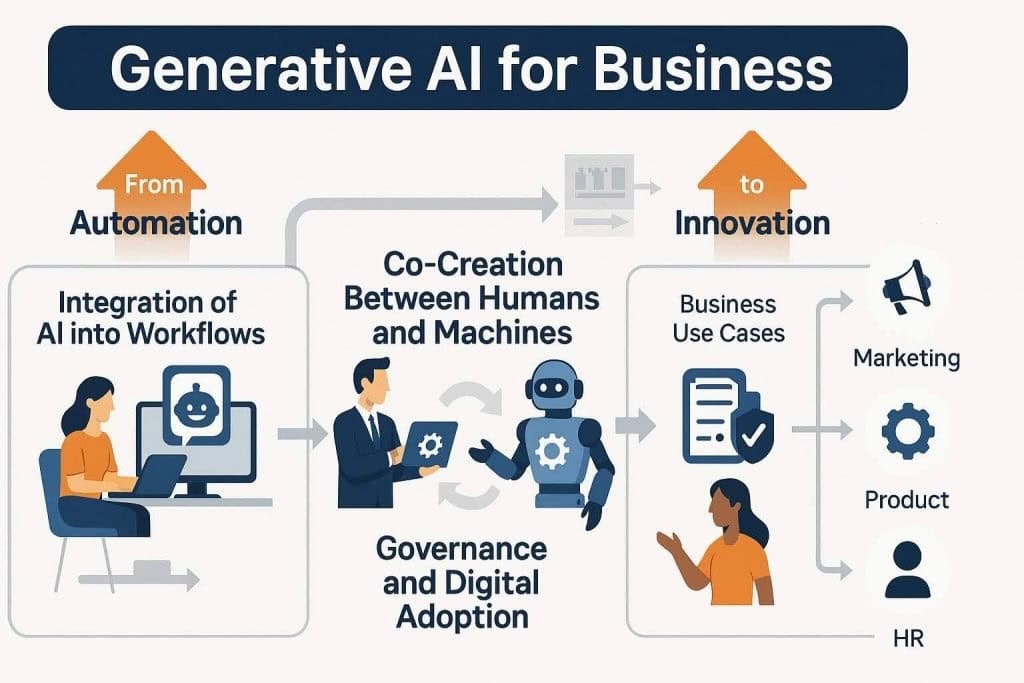

In the past few years, the enterprise AI landscape has shifted from automation-focused tools to generative systems capable of producing new content, ideas, and even strategic insights. As someone who has worked deeply across both technical implementation and business transformation initiatives, I can say with confidence that we are no longer simply using AI to reduce operational cost. We are entering a phase where generative AI for business is becoming a central driver of value creation and innovation.

This evolution is not just about technology. It is about rethinking the architecture of work, decision-making, and organizational design. Businesses are beginning to recognize that leveraging generative AI requires far more than deploying tools. It involves a layered strategy that includes model governance, infrastructure readiness, and human-centric adoption. Those who treat generative AI as a bolt-on solution will struggle to see sustained impact. Those who integrate it into the fabric of their workflows and talent development programs will gain a significant competitive edge.

In this article, I will explore how generative AI is transforming the way businesses operate, the core infrastructure that underpins these technologies, and how leaders can drive meaningful transformation. I will also address the critical, and often overlooked, aspect of digital adoption. Without real-world usage by employees and stakeholders, even the most powerful generative tools fail to deliver value. This is where digital adoption platforms, which enable contextual, real-time guidance for end users, become essential.

Let’s explore the key shifts that are shaping the generative AI journey for enterprise teams today.

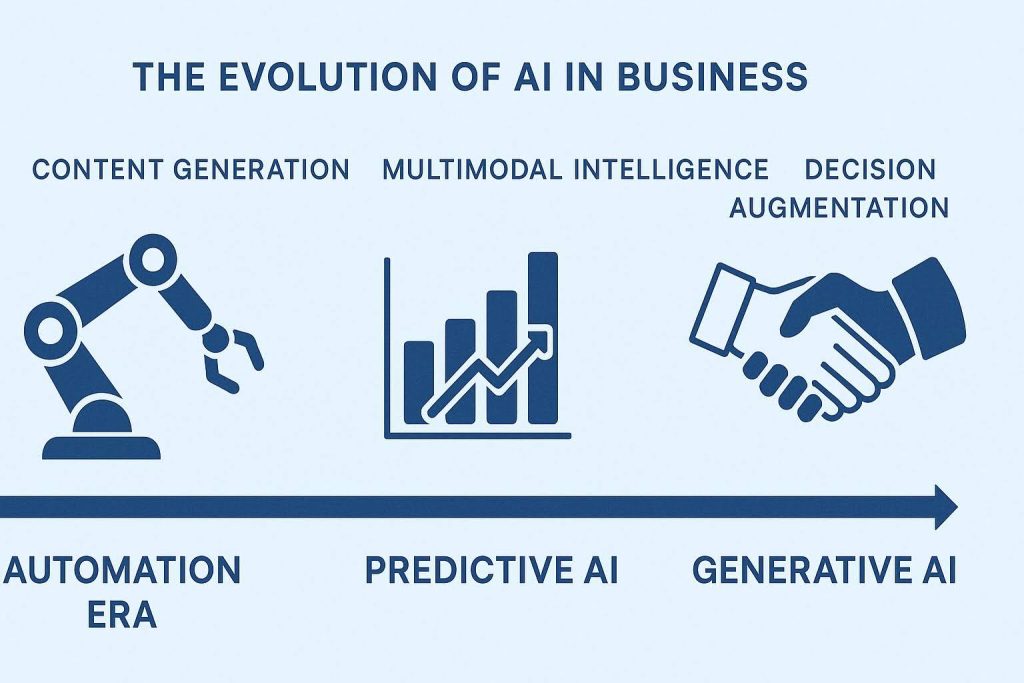

Traditional enterprise AI has long been rooted in prediction. We’ve spent the last decade deploying machine learning systems that excel at classification, forecasting, and anomaly detection. These tools have delivered significant gains in operational efficiency, particularly in areas like fraud detection, churn analysis, and demand forecasting. However, the outputs of these systems have always been limited to what they can infer from past data. They were powerful, but reactive.

Generative AI introduces a fundamentally different capability. Instead of merely analyzing historical data to predict outcomes, generative systems can create entirely new content. This includes text, images, code, audio, and even synthetic datasets. What makes this shift profound is that we’re no longer bound by past patterns. Instead, we can now synthesize new ideas and artifacts, enabling co-creative processes between humans and machines.

This capability is powered by large-scale foundation models, such as OpenAI’s GPT-4, Meta’s LLaMA, and others. These models are trained on vast corpora of text and, in some cases, images and other modalities, and they can perform many tasks that previously required expert human judgment. They can draft legal memos, generate code snippets, produce personalized marketing content, and simulate business scenarios with remarkable fluency. The implications for enterprise productivity and creativity are immense.

Another defining characteristic of generative AI is its ability to operate across multiple modalities. Text-to-image models, audio synthesis tools, and code generation engines are all part of this ecosystem. This opens the door to richer applications that integrate voice, text, visuals, and structured data. We are beginning to see AI systems that can read a product spec, generate an instructional video, and create associated training material, all from a single prompt.

Generative AI is also increasingly context-aware. Through techniques like Retrieval-Augmented Generation (RAG), models can access enterprise-specific knowledge bases, enabling them to generate outputs that are not only grammatically correct but also organizationally relevant. This is where generative AI begins to move from novelty to necessity in a business environment.

In marketing, generative AI for business is enabling hyper-personalization at scale. We can now auto-generate campaign assets tailored to individual customer segments, A/B test ideas before launch, and localize content instantly. AI-generated video scripts, social media captions, and even product descriptions are being deployed across global marketing operations. More importantly, these outputs can align with brand voice and compliance rules when integrated with internal knowledge systems.

Customer service is also undergoing a transformation. AI-powered virtual agents can now handle complex queries with nuanced language understanding. When combined with real-time knowledge retrieval, these systems reduce resolution times and enhance customer satisfaction. Instead of replacing human agents, generative tools act as real-time co-pilots, surfacing relevant information, suggesting responses, and handling routine tasks autonomously.

Generative AI is accelerating product development across industries. Engineers are using code generation tools to write boilerplate code, debug existing systems, and even refactor legacy software. In hardware design, generative models can suggest improvements based on performance goals, cost constraints, or material availability.

One of the most exciting frontiers is the use of synthetic data to simulate edge cases or accelerate machine learning pipelines. Rather than waiting months to gather real-world sensor data, teams can use generative tools to produce test environments in days. This compresses time-to-market and enables more robust product experimentation.

Generative AI is making inroads into operations through smart documentation and automated compliance. Business teams are using AI to draft contracts, policy documents, and internal communications. By feeding models with institutional templates and domain-specific knowledge, companies can help ensure that outputs meet quality and regulatory standards.

Supply chain teams are using generative AI for scenario planning, where the model explores what-if situations based on dynamic inputs like weather disruptions or geopolitical risks. These models don’t just forecast; they help teams simulate decisions and understand downstream effects in a conversational, visual format.

In HR, generative AI is transforming knowledge management and employee engagement. Internal chatbots can now answer policy questions, recommend courses, and provide real-time feedback on performance documentation. More significantly, generative AI is being used to develop adaptive learning experiences. Instead of static training manuals, employees receive AI-generated microlearning tailored to their roles and knowledge gaps.

This is one of the areas where the business benefits of generative AI are most clearly visible. By automating onboarding and reskilling at scale, organizations can significantly improve workforce agility. And when integrated with digital adoption platforms, this guidance becomes even more powerful, delivering contextual help exactly where and when it’s needed.

The first major architectural decision is how your enterprise engages with foundation models. There are three primary strategies: consuming pre-built APIs from vendors like OpenAI, fine-tuning open-source models for internal use, or building proprietary models from scratch. Each path comes with trade-offs in control, cost, compliance, and performance.

Most organizations are opting for fine-tuning or prompt engineering over full model training. This allows them to customize behavior without incurring the resource demands of training from raw data. Fine-tuned models can be aligned with tone, terminology, and compliance needs, which is critical in regulated industries like finance or healthcare.
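Fine-tuning for tone and terminology starts with curated example conversations. As a minimal sketch, the snippet below assembles such examples as JSON Lines in the chat-style `messages` format that OpenAI documents for fine-tuning its chat models. Other providers and open-source training scripts use different schemas, so check the field names against your vendor's documentation. The system prompt, the sample question/answer pairs, and the helper names are hypothetical.

```python
import json

# Hypothetical house style the fine-tuned model should learn.
SYSTEM_PROMPT = (
    "You are the support assistant for Example Bank. "
    "Use plain language and always say 'client', never 'customer'."
)

def build_example(user_text: str, ideal_answer: str) -> dict:
    """One training example: system instructions, a user turn, and the target reply."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": ideal_answer},
        ]
    }

def validate(example: dict) -> None:
    """Basic checks worth running before any upload."""
    messages = example["messages"]
    if messages[-1]["role"] != "assistant":
        raise ValueError("each example must end with the target assistant reply")
    if any(not m["content"].strip() for m in messages):
        raise ValueError("message content must not be empty")

def to_jsonl(examples: list[dict]) -> str:
    """Validate, then serialize as JSON Lines: exactly one JSON object per line."""
    for ex in examples:
        validate(ex)
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)

# Hypothetical curated pairs, written or approved by domain experts.
examples = [
    build_example("How do I dispute a card charge?",
                  "Clients can dispute a charge from the Transactions page in online banking."),
    build_example("Can my spouse access my account?",
                  "Only named account holders can access an account. "
                  "A client can add a joint holder at any branch."),
]

dataset = to_jsonl(examples)
```

Most of the value lies in the examples themselves: a few hundred consistent, expert-reviewed pairs usually teach tone and terminology better than a large, noisy set.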

One of the limitations of generative models is their static knowledge cutoff: they know nothing about events after training or about internal documents they never saw. To overcome this, companies are integrating Retrieval-Augmented Generation (RAG) pipelines, which combine LLMs with vector databases like Pinecone or Weaviate. These databases store embeddings of internal documents, allowing the system to retrieve relevant passages and place them in the model’s prompt before it generates a response. This can substantially improve the relevance and accuracy of AI outputs in real business contexts.
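To illustrate the retrieval step, here is a toy sketch of the RAG flow described above. It is not the Pinecone or Weaviate API: a bag-of-words count stands in for a learned embedding model, and an in-memory list stands in for the vector database. The class and function names and the sample policy documents are invented for illustration.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Stand-in 'embedding': lowercase word counts (real systems use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class InMemoryVectorStore:
    """Minimal stand-in for a vector database: stores (embedding, text) pairs."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored passages most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

def build_prompt(store: InMemoryVectorStore, question: str, k: int = 2) -> str:
    """Retrieve relevant passages and place them ahead of the user's question."""
    context = "\n".join(f"- {p}" for p in store.search(question, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

# Invented sample documents standing in for an internal knowledge base.
store = InMemoryVectorStore()
store.add("Travel expenses must be submitted within 30 days of the trip.")
store.add("The VPN is required when accessing internal systems remotely.")
store.add("Parental leave policy: employees receive 16 weeks of paid leave.")
```

Because word counts only match exact tokens ("submit" does not match "submitted"), real pipelines use embedding models that capture meaning. The overall shape (embed, search, then prepend the retrieved context to the prompt) stays the same.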

Additionally, prompt chaining and multi-step workflows are becoming standard. Instead of a single prompt producing a single output, advanced use cases involve sequences where one AI task feeds into another. For example, summarizing a meeting transcript, drafting action items, and scheduling follow-ups can be handled in a single workflow powered by generative logic.
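At its core, a prompt chain is a loop in which each step's output becomes part of the next step's prompt. The sketch below expresses the meeting example as three chained prompts. `stub_model` is a hypothetical placeholder for a real LLM client call, and the final step only drafts a follow-up email; actually booking meetings would require a separate calendar integration.

```python
from typing import Callable

ModelFn = Callable[[str], str]

# Each step is a prompt template; {previous} receives the prior step's output.
MEETING_WORKFLOW = [
    "Summarize this meeting transcript in three bullet points:\n{previous}",
    "From this summary, list concrete action items, each with an owner:\n{previous}",
    "Draft a short follow-up email proposing check-in dates for these action items:\n{previous}",
]

def run_chain(steps: list[str], initial_input: str, model: ModelFn) -> list[str]:
    """Run the prompts in order, feeding each output into the next prompt."""
    outputs: list[str] = []
    previous = initial_input
    for template in steps:
        prompt = template.format(previous=previous)
        previous = model(prompt)
        outputs.append(previous)
    return outputs

def stub_model(prompt: str) -> str:
    """Placeholder for a real LLM call: echoes the instruction line so the data flow is visible."""
    return f"[response to: {prompt.splitlines()[0]}]"

results = run_chain(
    MEETING_WORKFLOW,
    "Ana: ship v2 Friday. Raj: QA needs two more days.",
    stub_model,
)
```

Keeping every intermediate output in `results`, rather than only the last one, makes each step available for logging or human review, which matters once chains grow longer.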

Deploying generative AI at scale requires rigorous operational practices. This emerging discipline is often called LLMOps, and it includes tools and practices for monitoring output quality, detecting and reducing hallucinations, and logging prompt interactions. Enterprises must think seriously about prompt versioning, performance analytics, and feedback loops from users to continuously improve system behavior.
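As a minimal sketch of the logging and feedback loop described above, the snippet below records each prompt, response, prompt version, and latency, then aggregates user ratings per prompt version. The class names, the version labels, and `echo_model` (a placeholder for a real model call) are hypothetical; a production setup would persist these records in a secured store.

```python
import statistics
import time
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Interaction:
    prompt_version: str                 # hypothetical naming scheme, e.g. "summarize-v2"
    prompt: str
    response: str
    latency_ms: float
    user_rating: Optional[int] = None   # 1-5 feedback score, if the user gave one
    timestamp: float = field(default_factory=time.time)

class InteractionLog:
    """In-memory stand-in for a prompt/response store."""

    def __init__(self) -> None:
        self.records: list[Interaction] = []

    def call(self, version: str, prompt: str, model: Callable[[str], str]) -> str:
        """Invoke the model, timing the call and logging prompt, response, and version."""
        start = time.perf_counter()
        response = model(prompt)
        latency_ms = (time.perf_counter() - start) * 1000
        self.records.append(Interaction(version, prompt, response, latency_ms))
        return response

    def rate(self, index: int, rating: int) -> None:
        """Attach user feedback to a logged interaction."""
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.records[index].user_rating = rating

    def mean_rating_by_version(self) -> dict[str, float]:
        """Average feedback per prompt version, ignoring unrated interactions."""
        scores: dict[str, list[int]] = {}
        for r in self.records:
            if r.user_rating is not None:
                scores.setdefault(r.prompt_version, []).append(r.user_rating)
        return {version: statistics.mean(s) for version, s in scores.items()}

def echo_model(prompt: str) -> str:
    """Placeholder for a real LLM client call."""
    return prompt.upper()

log = InteractionLog()
log.call("summarize-v1", "summarize the q3 report", echo_model)
log.call("summarize-v2", "summarize the q3 report in 3 bullets", echo_model)
log.call("summarize-v2", "summarize the q4 report in 3 bullets", echo_model)
log.rate(0, 3)
log.rate(1, 5)
log.rate(2, 4)
```

Comparing average ratings per prompt version is a simple way to check whether a revised prompt actually helped before rolling it out more widely.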

Security is another crucial concern. Prompts and generated content may contain sensitive information, so encryption, access control, and data masking must be baked into the architecture. The governance of model behavior is not optional; it is a precondition for safe and sustainable generative AI use.
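One common masking approach is to swap sensitive values for placeholders before a prompt leaves your environment, then restore them in the response. The sketch below does this with a few regular expressions. The patterns are illustrative and will miss many real-world formats, so treat them as an assumption rather than a complete PII detector; the `mask` and `unmask` helpers are hypothetical.

```python
import re

# Illustrative patterns only: real deployments need broader, locale-aware PII detection.
# Order matters: card numbers are masked before the looser phone pattern runs.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "PHONE": re.compile(r"\+?\d{1,3}[ .-]?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}\b"),
}

def mask(text: str) -> tuple[str, dict[str, str]]:
    """Replace sensitive spans with numbered placeholders.

    Returns the masked text plus a token -> original mapping that stays inside your systems.
    """
    mapping: dict[str, str] = {}
    counter = 0
    for label, pattern in PATTERNS.items():
        def substitute(match: re.Match) -> str:
            nonlocal counter
            counter += 1
            token = f"<{label}_{counter}>"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(substitute, text)
    return text, mapping

def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore original values in the model's response, inside the trusted boundary."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text
```

For example, `mask("Contact jane.doe@example.com or +1 555-123-4567 about card 4111 1111 1111 1111.")` sends only `"Contact <EMAIL_1> or <PHONE_3> about card <CARD_2>."` to the model, while the mapping needed to restore the values never leaves your environment.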

Generative AI operates in a rapidly evolving legal and regulatory environment. The EU AI Act, for example, introduces binding requirements around transparency, risk categorization, and human oversight. In the United States, NIST’s AI Risk Management Framework offers voluntary guidance on accountability and model auditing. Every business deploying generative AI must stay ahead of these frameworks or risk penalties and reputational damage.

Internally, this means building cross-functional AI governance councils. These teams bring together legal, IT, HR, and business stakeholders to define usage policies, approval workflows, and compliance procedures. Having clear guidelines for prompt design, content validation, and model usage is no longer optional; it is a strategic necessity.

Enterprises must also address intellectual property rights, especially when AI-generated content is used externally. From marketing assets to legal documents, it is vital to know whether the model output is truly “owned” by the business, and whether it complies with fair use and copyright law.

Beyond compliance, the ethical risks are significant. Bias in training data can lead to discriminatory outputs. Hallucinations can introduce factual inaccuracies into customer-facing communication. Enterprises must test for these edge cases continuously and develop red-teaming practices to probe model weaknesses. Responsible use of generative AI is not a one-time audit; it is an ongoing, iterative process.

In my work with enterprise clients, one of the most powerful shifts I’ve witnessed is how generative AI is transitioning from a tool for automation to a partner in co-creation. Unlike traditional automation, which simply removes repetitive tasks, generative AI invites us to rethink how work itself is structured. It becomes a collaborator that assists in generating ideas, drafting documents, exploring options, and challenging assumptions.

This shift requires businesses to rethink their operating model. We are seeing the emergence of new hybrid roles, where domain experts partner with AI to deliver better outcomes. For example, legal professionals are not just reviewing contracts but co-authoring them with AI. Marketers are not just approving content but iterating on ideas with AI suggestions. These interactions are not about replacement but amplification.

To support this new way of working, organizations must foster a culture of experimentation. Leaders need to create safe spaces for teams to explore generative tools without fear of failure. The most innovative teams I’ve seen are those that treat AI not as a black box, but as a design partner. This means investing in training, creating sandbox environments, and encouraging bottom-up experimentation across departments.

Generative AI also plays a growing role in decision support. Whether it's generating insights from customer feedback, simulating scenarios in supply chain management, or summarizing compliance reports, AI can augment decision quality and speed. But to truly unlock this benefit, we must integrate AI outputs into workflows, not leave them isolated.

Decision-making is often a social and political process within large organizations. AI tools can contribute valuable inputs, but without the right context, they risk being misunderstood or ignored. Therefore, it’s essential to build interfaces where AI-generated content can be questioned, validated, and integrated into team deliberations. This is where adoption platforms and guided workflows begin to make a tangible impact.

Across industries, a common thread has emerged. Many enterprise AI initiatives fail to scale not because the models are inaccurate or the use cases are poorly defined, but because employees simply do not use the tools as intended. In other words, the bottleneck is often not the model but adoption.

Generative AI introduces new user paradigms. Prompting, validating AI outputs, and understanding how to guide a model toward better performance are skills that most employees have never been trained in. Unlike traditional software, which often has a fixed interface and behavior, generative tools are probabilistic and adaptive. This creates a steep learning curve, even for otherwise tech-savvy users.

Digital adoption platforms (DAPs) are crucial to solving this problem. These platforms provide contextual help, embedded walkthroughs, and just-in-time training directly within enterprise applications. For example, if a sales team is working within a CRM that now includes an AI assistant, a DAP can guide them through the process of using the assistant effectively, correcting mistakes, and interpreting results.

A key factor in the success of generative AI for business is not just the capability of the models themselves, but whether users can understand and interact with them effectively. DAPs are especially valuable here. Their non-intrusive, in-context support helps users onboard to AI features directly within the flow of work. This includes teaching employees how to write effective prompts, explaining system outputs, and reinforcing responsible use policies.

Instead of relying on external training portals or static documentation, DAPs provide dynamic and contextual learning experiences. For employees who are engaging with AI systems in real time, the presence of relevant, embedded guidance can significantly boost confidence, reduce errors, and encourage experimentation.

When integrated into a broader AI transformation strategy, DAPs can bridge the common gap between technical implementation and user readiness. This alignment is often where enterprise AI initiatives succeed or fail, and forward-thinking organizations are beginning to treat it as a strategic priority.

If your organization is serious about generative AI, then internal upskilling must be on your roadmap. Generative AI for business is not just a technical investment. It is a human capital strategy. One of the most important capabilities employees can develop is prompt engineering. Knowing how to ask the right questions, structure context, and guide outputs is emerging as a high-value business skill.

In many cases, we’re seeing the rise of prompt libraries, internal documentation of best practices, and even prompt review boards. These mechanisms are necessary to share knowledge across teams and ensure consistent quality in AI outputs. In parallel, companies should create AI literacy programs that go beyond the basics. These programs should cover model limitations, ethical considerations, and real-world use case design.
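A prompt library can be as simple as a registry of named, versioned templates that only serves wording a reviewer has approved. The sketch below assumes that workflow: the `PromptLibrary` class, the `approved` flag standing in for a prompt review board's sign-off, and the example templates are all hypothetical. It relies only on Python's standard `string.Template`.

```python
from dataclasses import dataclass
from string import Template

@dataclass(frozen=True)
class PromptTemplate:
    name: str
    version: int
    text: str        # uses $placeholders, e.g. $product
    approved: bool   # sign-off from a (hypothetical) prompt review board

class PromptLibrary:
    """Shared, versioned prompt templates so teams reuse reviewed wording."""

    def __init__(self) -> None:
        self._templates: dict[str, list[PromptTemplate]] = {}

    def publish(self, template: PromptTemplate) -> None:
        """Add a new version; versions must strictly increase."""
        versions = self._templates.setdefault(template.name, [])
        if versions and template.version <= versions[-1].version:
            raise ValueError("new version must be higher than the latest")
        versions.append(template)

    def latest_approved(self, name: str) -> PromptTemplate:
        """Newest version that has passed review."""
        for t in reversed(self._templates.get(name, [])):
            if t.approved:
                return t
        raise LookupError(f"no approved version of {name!r}")

    def render(self, name: str, **values: str) -> str:
        """Fill the latest approved template; substitute() raises KeyError if a value is missing."""
        return Template(self.latest_approved(name).text).substitute(**values)

library = PromptLibrary()
library.publish(PromptTemplate(
    "product-description", 1,
    "Write a 50-word product description for $product in our brand voice.",
    approved=True))
library.publish(PromptTemplate(
    "product-description", 2,
    "Write a 50-word description of $product for $audience. Avoid superlatives.",
    approved=False))  # still awaiting review, so render() keeps serving version 1
```

Serving only approved versions lets teams iterate on drafts without those drafts reaching end users before review.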

A well-structured generative AI training program for business should be multi-tiered. It should start with executive briefings focused on governance and strategy, move into hands-on labs for business users, and provide technical exploration for IT and data teams. More importantly, it should be aligned with real use cases inside your organization, not generic examples from the internet.

To scale this effort, many enterprises are forming internal centers of excellence (CoEs) for generative AI. These CoEs act as both technical stewards and business translators. They help departments define use cases, assess risk, choose tools, and monitor performance. But their most important function is change management. They help teams transition from AI awareness to AI competency.

These CoEs often include members from IT, L&D, legal, and business units. They are not just about centralizing expertise, but about democratizing AI knowledge across the organization. This structure also enables faster feedback loops. When a team in marketing hits a roadblock, they can escalate it to the CoE, which can then update training materials, refine prompt guidance, or adjust system settings.

By treating generative AI fluency as a core organizational capability, rather than a niche technical skill, companies can accelerate both experimentation and enterprise value realization.

We are currently in a transitional phase. Many companies are running generative AI pilots in isolated departments. While this is a necessary step, it cannot be the endpoint. The organizations that will lead in the next decade are those that think in terms of AI-native architecture. This means reimagining how data, systems, workflows, and people come together in a generative-first environment.

AI-native organizations design processes that assume a copilot will be involved. They optimize knowledge systems to support LLMs. They build governance processes that are dynamic and iterative. And they prioritize real-time guidance and training to make sure their people can keep up with the pace of change.

As models become more multimodal, they will interact with documents, databases, images, and speech in a unified way. The next generation of AI tools will not just answer questions or generate drafts. They will act. Agentic AI systems are already emerging. These systems can perform tasks autonomously, like scheduling meetings, booking resources, generating reports, or initiating follow-ups.

Integrating these systems safely into enterprise environments will require robust API governance, permission controls, and human-in-the-loop safeguards. But the potential upside is huge. Imagine an AI agent that handles 80 percent of routine project management tasks or synthesizes weekly executive briefings from raw data. That is where we are heading.
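Permission controls and human-in-the-loop safeguards can be sketched as a gate that every agent action must pass: an allow-list decides which tools an agent may call at all, and high-risk actions wait for human approval. The role name, tool names, risk levels, and the `gate` function below are hypothetical. In practice the approval hook would be a review queue or chat prompt rather than console input.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable

class Risk(Enum):
    LOW = "low"    # e.g. generating an internal report for review
    HIGH = "high"  # e.g. emailing many people or spending money

@dataclass
class ProposedAction:
    tool: str      # hypothetical tool names such as "calendar.create_event"
    args: dict
    risk: Risk

# Hypothetical allow-list: the only tools each agent role may ever call.
PERMISSIONS: dict[str, set[str]] = {
    "pm-assistant": {"calendar.create_event", "report.generate", "email.send"},
}

AUDIT_LOG: list[tuple[str, str, str]] = []

def gate(role: str, action: ProposedAction,
         approve: Callable[[ProposedAction], bool]) -> str:
    """Decide whether an agent's proposed action may run.

    Returns "denied" if the tool is outside the role's allow-list,
    "rejected" if a human declines a high-risk action, else "approved".
    """
    if action.tool not in PERMISSIONS.get(role, set()):
        status = "denied"
    elif action.risk is Risk.HIGH and not approve(action):
        status = "rejected"
    else:
        status = "approved"  # a real system would dispatch to the tool here
    AUDIT_LOG.append((role, action.tool, status))
    return status

def ask_human(action: ProposedAction) -> bool:
    """Human-in-the-loop hook implemented as a console prompt for illustration."""
    answer = input(f"Allow {action.tool} with {action.args}? [y/N] ")
    return answer.strip().lower() == "y"
```

Checking the allow-list before asking a human means reviewers are only interrupted for actions the agent is permitted to take in the first place, and the audit log records every decision either way.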

Generative AI is more than a trend. It is a new capability layer in the modern enterprise. It changes how we produce value, how we engage customers, and how we empower employees. But this transformation will not happen automatically. It will require thoughtful infrastructure, rigorous governance, and above all, a relentless focus on human-centered adoption.

In my experience, the companies that succeed with generative AI for business are those that treat it as both a technical and organizational transformation. They do not chase shiny tools. They build capability. They create internal expertise. They invest in platforms that help their people succeed.

For business leaders adopting generative AI, the message is clear. This is your moment to lead. Move beyond automation. Design for innovation. Invest in your people as much as your platforms. The future belongs to those who can build systems that are not only intelligent, but usable, inclusive, and adaptable.

As we've explored throughout this article, the real promise of generative AI for business is not just in the sophistication of the models, but in the ability of your people to use them meaningfully, responsibly, and consistently. That last part, consistently, is where most organizations hit a wall. Because even when the technology is in place, and the use cases are clear, adoption is still a deeply human process. And that’s exactly where VisualSP makes the difference.

At VisualSP, we’ve spent years solving this challenge across hundreds of enterprise environments. Our platform is designed to make AI adoption simple, scalable, and contextual. Whether your teams are interacting with CRMs or other internal tools, VisualSP overlays just-in-time support directly inside those applications. No switching tabs, no searching knowledge bases, only real help, exactly where and when it’s needed.

One of the things I’m most excited about is how our AI assistant reduces the overhead for IT and business teams. You don’t need to manually build every walkthrough or guidance prompt: VisualSP’s built-in AI assistant helps you generate in-app support content faster than ever, including prompt templates, policy reminders, and step-by-step walkthroughs for new AI-enabled workflows. This means your users aren’t just aware of the tools available to them; they’re using them with confidence.

We’ve helped over two million users. From global enterprises to public sector teams, the results are the same: faster adoption, better productivity, and smarter use of enterprise systems. And we do it without compromising security. Your data stays private. Your teams stay empowered.

If you’re serious about unlocking the true business value of generative AI, I encourage you to see how VisualSP can help you bridge the last-mile gap between AI capability and user adoption.

Get a personalized demo of VisualSP and see how we make generative AI work at scale, right inside the flow of work.
